
the Blog

On PhD advisory committees

By Guillaume Filion, filed under

motivation,

PhD.

• 08 February 2023 •

Joana, year 1

Joana is quite tense; she smiles a little too much. She brought some cookies for the committee, which I find very nice. I eat a cookie and thank her for the attention. I hope it helps her relax. A few minutes later, the chair of the committee thanks everybody for coming and goes through the protocol: Joana will give a forty-minute presentation of her work, then we will discuss her project together, then she will leave the room so that we can speak with her adviser in private, and finally she will come back into the room without her adviser so that we can speak with her in private. “You can start whenever you are ready, Joana,” says the chair. Joana breathes deeply and she starts.

It is the middle of the presentation. Joana is still answering the question of the chair. Her answer does not make sense to me but I nod reassuringly. I am curious as to whether her adviser will correct her. I met him at a congress but I do not know his supervision style.

“What Joana meant to say is that...”

“A protective micromanager” I think to myself. This...

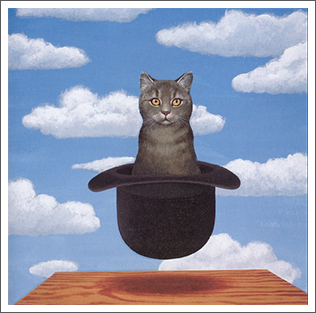

Fisher information (with a cat)

By Guillaume Filion, filed under

Immanuel,

bias–variance trade-off,

Fisher information,

dialogue.

• 13 December 2022 •

It is still summer but the days are getting shorter (p < 0.05). Edgar and Sofia are playing chess, Immanuel purrs on a sofa next to them. Edgar has been holding his head for a while, thinking about his next move. Sofia starts:

“Something bothers me Immanuel. In the last post, you told us that Fisher information could be defined as a variance, but that is not what I remember from my classes of mathematical statistics.”

“What do you remember, Sofia?”

“Our teacher said it was the curvature of the log-likelihood function around the maximum. More specifically, consider a parametric model $f(X;\theta)$ where $X$ is a random variable and $\theta$ is a parameter. Say that the true (but unknown) value of the parameter is $\theta^*$. The first terms of the Taylor expansion of the log-likelihood $\log f(X;\theta)$ around $\theta^*$ are

$$\log f(X;\theta^*) + (\theta - \theta^*) \cdot \frac{\partial}{\partial \theta} \log f(X;\theta^*) + \frac{1}{2}(\theta - \theta^*)^2 \cdot \frac{\partial^2}{\partial \theta^2} \log f(X;\theta^*).$$

Now compute the expected value and obtain the approximation below. We call it $\varphi(\theta)$ to emphasize that it is...
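As a numerical aside on the two definitions under discussion (Fisher information as a variance of the score and as an expected curvature of the log-likelihood), the sketch below compares them for a Poisson model. The choice of the Poisson model, the value of the parameter, and the function names are assumptions made for illustration; they do not come from the dialogue.

```python
import math

def poisson_pmf(k, lam):
    """Probability that a Poisson variable with mean lam equals k."""
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))

def fisher_two_ways(lam, kmax=200):
    """Return (variance of the score, expected negative curvature) at lam.

    For the Poisson model, log f(x; lam) = x*log(lam) - lam - log(x!),
    so the score is x/lam - 1 and the second derivative is -x/lam**2.
    Expectations are computed by summing over k = 0..kmax (the tail is negligible).
    """
    var_score = 0.0
    neg_curvature = 0.0
    for k in range(kmax + 1):
        p = poisson_pmf(k, lam)
        score = k / lam - 1.0
        var_score += p * score ** 2        # the score has mean zero at the true parameter
        neg_curvature += p * k / lam ** 2  # minus the second derivative
    return var_score, neg_curvature

var_score, neg_curvature = fisher_two_ways(3.0)
print(var_score, neg_curvature)  # both should be close to 1/3
```

In this particular model, the two numbers agree and equal one over the mean, which suggests that the two definitions are not in conflict.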

Does science need statistical tests?

By Guillaume Filion, filed under

p-hacking,

dialogue,

statistics.

• 14 October 2022 •

Some time ago, my colleague John asked for help with the statistics for one of his manuscripts.

“We have this situation where we knocked out a gene with CRISPR and I want to test if it affects viability. I know that you are supposed to use a non-parametric test when the sample is small, but I have heard that you can still use the t test if the variables are Gaussian. So now I am genuinely confused. Which test should I use?”

“I agree. It’s confusing. Why do you want to make a statistical test by the way?”

“Same as everyone. I want to know if the effect is significant. Plus, I’m a hundred percent sure that the reviewers will ask for it.”

“I see. I will rephrase my question then. What decision do you have to make?”

“I can give you all the details of our experiments if you want, but I’m surprised. Nobody has ever asked me that before and I thought that experimental details do not really matter so much for a statistical test. So what kind of details do you need?”

“Nothing in particular. I just want to know whether you...

Journal clubs, ranked from worst to best

By Guillaume Filion, filed under

science,

journal club,

opinion.

• 07 March 2022 •

One of the most difficult tasks for an academic is to know the literature. Most labs run some kind of literature discussion, usually referred to as a “journal club” in biology. We tested several variants when I created my lab in 2012 and we have learned a good deal about what works and what does not. So here is my personal view on the different types of journal clubs, from worst to best.

5. Online discussion forum

Live meetings have several disadvantages: they disrupt the workflow of experiments, there are no records, and some people speak too much. I reasoned that a good way of addressing all this would be to put up an online forum where we could upload a paper and share our thoughts. I invited all ~300 researchers of the institute to participate, with the plan to move to social media if it gained momentum.

That did not work at all. We never got past the first paper, which had only one comment (mine). It was not appealing at all to formalize your thoughts in order to write a paragraph and post it for people who work next door. The written form is...

A gentle introduction to the Cramér-Rao lower bound (with a cat)

By Guillaume Filion, filed under

Immanuel,

dialogue,

Fisher information.

• 22 November 2021 •

It is summer, Edgar and Sofia are comfortably sitting on the terrace, watching the beautiful light of the end of the day. Edgar starts:

“Let’s play a game to see who is the better statistician! Immanuel my cat will give each of us a secret number strictly greater than zero. The other person will have to guess it.”

“How are we going to guess?”

“Let’s say that the secret numbers are the means of some Poisson variable. We generate samples at random. The one who gets the closest estimate by dinner time wins.”

“That sounds easy! Will Immanuel give us the same number?”

“What is the fun in that? Let’s ask him to give two different numbers. You know what to do. Just give me your first sample whenever you are ready and I will try to guess your secret number.”

Immanuel whispers something in the ear of Sofia and then does the same with Edgar. Sofia opens her laptop and after a few keystrokes she says “The first number I have for you is 1.”

“OK, I give up. You win.”

Sofia is puzzled at first, but then she notices how Immanuel is rolling...

A tutorial on t-SNE (3)

By Guillaume Filion, filed under

series: focus on,

statistics,

data visualization,

bioinformatics.

• 22 September 2021 •

This post is the third part of a tutorial on t-SNE. The first part introduces dimensionality reduction and presents the main ideas of t-SNE. The second part introduces the notion of perplexity. The present post covers the details of the nonlinear embedding.

On the origins of t-SNE

If you are following the field of artificial intelligence, the name Geoffrey Hinton should sound familiar. As it turns out, the “Godfather of Deep Learning” is the author of both t-SNE and its ancestor SNE. This explains why t-SNE has a strong flavor of neural networks. If you already know gradient descent and variational learning, then you should feel at home. Otherwise no worries: we will keep it relatively simple and we will take the time to explain what happens under the hood.

We have seen previously that t-SNE aims to preserve a relationship between the points, and that this relationship can be thought of as the probability of hopping from one point to the other in a random walk. The focus of this post is to explain what t-SNE does to preserve this relationship in a space of lower dimension.

The Kullback-Leibler...

How to teach yourself math

By Guillaume Filion, filed under

mathematics,

motivation,

learning.

• 03 May 2021 •

I recently left Barcelona after spending nearly nine years in the company of wonderful people who supported me and helped me carry forward my teaching and my research. At a goodbye dinner, I was surprised that a friend insisted that I should explain how I learned math, that it would be useful and inspirational.

I had never thought about it. I guess what he meant is that I should explain why formal computational approaches are so important for me, given that I do not have any diploma in mathematics or statistics.

Doing the math

I have been doing one hour of math every day for 23 years. I will get to the how, but for now I want to discuss the why. Sticking to this schedule rigorously means that you do 365 hours of math every year. At the time I studied (in biology), you had to take ~500 hours of class per year for three years in order to graduate, so it was considered that you reach the graduate level after you study ~1500 hours. With one hour per day, it will take you a little more than four years.
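The arithmetic above can be checked in a few lines. The variable names are ours; the figures (one hour per day, ~500 hours of class per year for three years) are the ones quoted in the paragraph.

```python
HOURS_PER_DAY = 1
DAYS_PER_YEAR = 365
GRADUATE_LEVEL_HOURS = 3 * 500  # ~500 hours of class per year for three years

hours_per_year = HOURS_PER_DAY * DAYS_PER_YEAR
years_needed = GRADUATE_LEVEL_HOURS / hours_per_year

print(f"{hours_per_year} hours per year")
print(f"{years_needed:.1f} years to reach {GRADUATE_LEVEL_HOURS} hours")
```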

At the graduate level, we had about...

The most important quality of a scientist

By Guillaume Filion, filed under

science,

management,

opinion.

• 15 September 2020 •

When I established my lab and started to recruit people, I thought that it would be interesting to gather some information about what makes a good or a bad scientist. To this end, I designed a short questionnaire with eight questions. There was no right or wrong, nor even a preferred answer. Those were just questions to help me know the candidates better.

The first question was “What is the most important quality of a scientist?” I had no particular expectation. Actually, I did not even know my own answer to this question. As it turned out, most candidates answered that it was either creativity or persistence.

If you have been in science for even a short while, you know why this makes sense. We have complicated problems to solve, so creativity and persistence are important. Yet, I was not convinced that a good scientist is someone who is either very creative or very persistent. The reason is that neither of these qualities defines a scientist. Artists, politicians, business people, social workers and pretty much everyone else greatly benefit from being creative or persistent.

Having spent more time with scientists, I came to find the answer to my...

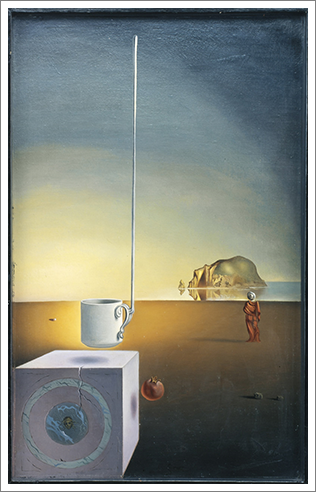

Scientific models

By Guillaume Filion, filed under

science,

model,

dialogue.

• 01 January 2020 •

Literature discussions were usually very quiet in the laboratory, but somehow, this article had sparked a debate. Linda thought it was very bad. Albert liked it very much. Kate, the PI, was undecided. At some point the discussion stalled, so Kate made a move to wrap up.

“So, Linda, why do you think the article is bad?”

“Because they are missing a thousand controls.”

“OK. Albert, why do you like this article?”

“I find their model in figure 6 really cool. Actually, if it is true, it…”

“Precisely my point!” interrupted Linda. “It’s pure speculation!”

Kate intervened.

“Albert, you describe figure 6 as a model. What makes it a model?”

Albert spoke after a pause.

“It’s an idealized summary of their findings.”

“Fantasized you mean!” replied Linda.

Kate ignored the point and turned to Linda.

“Linda, do you think that figure 6 is a model?”

“Of course not! It’s just speculation.”

“Now I have a question for you Albert: what is the difference between a model and a summary?”

While Albert was thinking, Kate continued.

“And I also have a question for you Linda: what is the difference between speculation and assumption?”

Now they were...

A tutorial on t-SNE (2)

By Guillaume Filion, filed under

perplexity,

t-SNE,

entropy.

• 16 December 2019 •

In this post I explain what perplexity is and how it is used to parametrize t-SNE. This post is the second part of a tutorial on t-SNE. The first part introduces dimensionality reduction and presents the main ideas of t-SNE. This is where you should start if you are not already familiar with t-SNE.

What is perplexity?

Before you read on, pick a number at random between 1 and 10 and ask yourself whether I can guess it. It looks like my chances are 1 in 10 so you may think “no there is no way”. In fact, there is a 28% chance that you chose the number 7, so my chances of guessing are higher than you may have thought initially. In this situation, the random variable is somewhat predictable but not completely. How could we quantify that?
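One possible way to put a number on this is the perplexity, computed as the exponential of the Shannon entropy. The sketch below is only illustrative: the 28% for the number 7 comes from the text, but the other nine probabilities are spread evenly as a placeholder and are not the values used in the post. The function name is ours.

```python
import math

def perplexity(probs):
    """Exponential of the Shannon entropy (in nats) of a discrete distribution."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return math.exp(entropy)

# Fully unpredictable choice: every number from 1 to 10 is equally likely.
uniform = [0.1] * 10

# Hypothetical skewed choice: 28% on the number 7, and the remaining 72%
# spread evenly over the other nine numbers (placeholder values).
skewed = [0.72 / 9] * 6 + [0.28] + [0.72 / 9] * 3

print(perplexity(uniform))  # 10, up to rounding error
print(perplexity(skewed))   # somewhat below 10
```

The uniform case behaves like a fair choice among 10 options, while the skewed case behaves like a fair choice among fewer options, which is one way to read "somewhat predictable but not completely".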

To answer this question, let us count the possible samples from this distribution. We ask $N$ people to choose a number at random between 1 and 10 and we record their answers $(x_1, x_2, \ldots, x_N)$. The number 1 shows up with probability $p_1 = 0.034$ so the total in the sample is approximately $n_1$...
