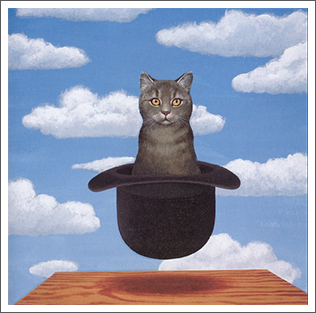

A gentle introduction to the Cramér-Rao lower bound (with a cat)

By Guillaume Filion, filed under

Immanuel,

dialogue,

Fisher information.

• 22 November 2021 •

It is summer, Edgar and Sofia are comfortably sitting on the terrace, watching the beautiful light of the end of the day. Edgar starts:

It is summer, Edgar and Sofia are comfortably sitting on the terrace, watching the beautiful light of the end of the day. Edgar starts:

“Let’s play a game to see who is the better statistician! Immanuel my cat will give each of us a secret number strictly greater than zero. The other person will have to guess it.”

“How are we going to guess?”

“Let’s say that the secret numbers are the means of some Poisson variable. We generate samples at random. The one who gets the closest estimate by dinner time wins.”

“That sounds easy! Will Immanuel give us the same number?”

“What is the fun in that? Let’s ask him to give two different numbers. You know what to do. Just give me your first sample whenever you are ready and I will try to guess your secret number.”

Immanuel whispers something in the ear of Sofia and then does the same with Edgar. Sofia opens her laptop and after a few keystrokes she says “The first number I have for you is 1.”

“OK, I give up. You win.”

Sofia is puzzled at first, but then she notices how Immanuel is rolling on the floor and cannot stop laughing. “Oh I see... Thanks for conceding defeat Edgar. For the sake of formalities, my guess is that your number is ten to the minus twenty, now is a good time to go for dinner and I win. ”

Let us watch it again in slow motion

Edgard implicitly assumed that every number is equally hard to guess, but his cat Immanuel decided to show him that there is more to being a good statistician at winning this game. Some numbers are intrinsically harder to guess than others.

To make his point, Immanuel gave Edgar a number that is very close to zero. Say 0.000398 for instance. Now Edgar has to generate Poisson samples with this mean and he realizes that more than 99.9% of the time, the number he will give to Sofia will be 0. Even after one attempt, Sofia’s guess will be 0 (or something very close because she knows that the number is positive) because that is the rational thing to say. And that will be very close to the actual number...

Now, Edgar’s only chance to win is that Immanuel gave Sofia an even smaller number. If this is the case, then Sofia will also produce a long series of 0, but her first sampled number is 1. Edgar understands that Sofia’s number is not that close to 0. In time, he might obtain a good estimate, but not within a margin smaller than 0.000398, which is the precision that Sofia will get from her first attempt.

There is no hope for him to win. He resigns.

Sofia, who is a good statistician understands the trick that the cat played on his master. She knows that Edgar’s number must be very close to 0, otherwise he would keep playing.

The Cramér-Rao lower bound

The high-level intuition behind the concept of Fisher information is that some estimation problems are more difficult than others, even within the same model, such as the Poisson or the Gaussian distribution.

Once we settle on an unbiased estimator, the expected error is usually measured by its variance. The Cramér-Rao lower bound is the lowest possible variance of any unbiased estimator within the model. If we have an estimator with this variance, there is no need to look for a better one: there is none.

In the case of the Poisson distribution with parameter $(\lambda)$, the Cramér-Rao bound is $(\lambda/n)$, where $(n)$ is the sample size (we will show this below). The sample mean is an unbiased estimator of $(\lambda)$ and its variance is $(\lambda/n)$ if sampling is independent, so this is the best possible estimator. Since the variance $(\lambda/n)$ is small when $(\lambda)$ is small, the estimator is better for small values of $(\lambda)$ than for large values.

This is what the example above demonstrates: as the Cramér-Rao bound becomes smaller, estimation problems become easier — they can even become trivially simple. In summary, Sofia won because Immanuel gave her a problem with a tiny Cramér-Rao bound. In any event, with the rules set by Edgar, the winner is most likely going to be the person with the problem that has the lowest Cramér-Rao bound.

Derivation of the bound

I always wondered how this result was discovered. Even though the derivation is straightforward, it is not so intuitive — feel free to fill me in if you know about the history of this result.

Say that we have an unbiased estimator $(g(X)$) of the parameter $(\theta)$, where $(X)$ is a sample with probability density $(f(X;\theta))$. We start from the definition, so we obtain $$\int g(X) f(X;\theta) dX = \theta.$$

Now we are looking for a way to use some inequality on integrals, but since nothing interesting appears in this form, we try to generate some interesting products, so we differentiate with respect to $(\theta)$. $$\int g(X) \cdot \frac{\partial f(X;\theta)}{\partial \theta} dX = \int g(X) \cdot \frac{\partial \log f(X;\theta)}{\partial \theta} f(X;\theta) dX = 1.$$

Now we have the expectancy of a product and we can use the Cauchy–Schwarz inequality because the expectation with respect to $(f(X;\theta))$ defines a scalar product over functions.

$$1 = \left[ E \left\{ g(X) \cdot \frac{\partial \log f(X;\theta)}{\partial \theta} \right\} \right]^2 \leq E \left\{g(X)^2\right\} \cdot E \left\{ \left[ \frac{\partial \log f(X;\theta)}{\partial \theta} \right]^2\right\} .$$

We are getting somewhere: the right hand side is almost the variance of the estimator, times a strange term that looks like the variance of something. The left hand side is the covariance of the estimator and that something, and because that something has expectancy 0 (we will prove this in a minute), we can replace $(g(X))$ with $(g(X) - \theta)$ without changing the result. In the end, we obtain the Cramér-Rao lower bound as $$E \left\{\left(g(X)-\theta\right)^2\right\} \geq \frac{1}{E \left\{ \left[ \frac{\partial \log f(X;\theta)}{\partial \theta} \right]^2\right\}} = \frac{1}{I(\theta)}.$$

The term $(I(\theta))$ is a version of the Fisher information and we could consider that the derivation above is a motivation for its definition. Among other things, it is the variance of a random variable $(S_\theta(X) = \partial \log f(X;\theta) / \partial \theta)$ called the score and that has expectancy equal to 0. Indeed, $$E\left( S_\theta(X) \right) = \int \frac{\partial \log f(X;\theta)}{\partial \theta } f(X;\theta) dX = \frac{\partial}{\partial \theta} \int f(X;\theta) dX = 0.$$

The score is not a true statistic in the sense that it cannot be computed in practice: the expression depends on $(\theta)$, which is unknown. It is still useful for computations, just like probability density functions.

Let us give an example with the Poisson distribution and a sample of size $(n = 1)$. In this case, we replace $(f(X;\theta))$ with the discrete distribution over integers $(e^{-\lambda}\cdot \frac{\lambda^x}{x!} )$. The score is equal to $(-1 + x/\lambda)$, and because $(E(x) = \lambda)$ we can verify that its expectancy is 0. The square of the score is $(1 - 2x/\lambda + x^2/\lambda^2)$ and since $(E(x^2) = \lambda(1 + \lambda))$, the variance of the score comes as $(1 / \lambda)$.

The Fisher information for a Poisson variable is $(1 / \lambda)$ and the Cramér-Rao lower bound is $(\lambda)$. This is the variance of the sample mean when $(n = 1)$, which is then the best unbiased estimator. For a larger sample size $(n)$, assuming sampling independence, the score becomes a sum of $(n)$ identical terms, so its variance is $(n / \lambda)$ and the Cramér-Rao lower bound is $(\lambda / n)$.

Conclusion

The Cramér-Rao lower bound is usually introduced as a property of the Fisher information. I always found the definition of the Fisher information non-intuitive. To me, it makes more sense to show that unbiased estimators cannot go below a certain variance, and give that variance some name or some special meaning.

The Fisher information has some more direct interpretation but it takes some care to explain it step by step. I will present this direct interpretation in the next post.

« Previous Post | Next Post »

blog comments powered by Disqus