the Blog

A tutorial on t-SNE (2)

By Guillaume Filion, filed under

perplexity,

t-SNE,

entropy.

• 16 December 2019 •

In this post I explain what perplexity is and how it is used to parametrize t-SNE. This post is the second part of a tutorial on t-SNE. The first part introduces dimensionality reduction and presents the main ideas of t-SNE. This is where you should start if you are not already familiar with t-SNE.

What is perplexity?

Before you read on, pick a number at random between 1 and 10 and ask yourself whether I can guess it. It looks like my chances are 1 in 10, so you may think “no, there is no way”. In fact, there is a 28% chance that you chose the number 7, so my chances of guessing are higher than you may have thought initially. In this situation, the random variable is somewhat predictable, but not completely. How could we quantify that?

To answer the question, let us count the possible samples from this distribution. We ask $N$ people to choose a number at random between 1 and 10 and we record their answers $(x_1, x_2, \ldots, x_N)$. The number 1 shows up with probability $p_1 = 0.034$ so the total in the sample is approximately $n_1...
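To make the idea of a "somewhat predictable" variable concrete, here is a minimal sketch that assumes the usual definition of perplexity as the exponential of the Shannon entropy. Only the values 0.034 (for the number 1) and 0.28 (for the number 7) come from the text above; the other eight probabilities are made up for illustration, and the function name `perplexity` is my own.

```python
import math

def perplexity(probs):
    """Exponential of the Shannon entropy (natural log) of a distribution."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return math.exp(entropy)

# Ten equally likely numbers: nothing to exploit when guessing.
uniform = [0.1] * 10

# Hypothetical distribution for "pick a number between 1 and 10".
# Only p_1 = 0.034 and p_7 = 0.28 are from the text; the rest is invented.
skewed = [0.034, 0.09, 0.12, 0.10, 0.09, 0.09, 0.28, 0.10, 0.06, 0.036]

print(perplexity(uniform))  # 10 up to rounding error: ten effective choices
print(perplexity(skewed))   # less than 10: fewer effective choices
```

Under this definition, a uniform distribution over ten numbers has perplexity 10, and any bias toward some numbers pulls the value below 10.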

Focus on: the Kullback-Leibler divergence

By Guillaume Filion, filed under

Kullback-Leibler divergence,

series: focus on.

• 23 June 2019 •

The story of the Kullback-Leibler divergence starts in a top secret research facility. In 1951, right after the war, Solomon Kullback and Richard Leibler were working as cryptanalysts for what would soon become the National Security Agency. Three years earlier, Claude Shannon had shaken the academic world by formulating the modern theory of information. Kullback and Leibler immediately saw how this could be useful in statistics and they came up with the concept of information for discrimination, now known as relative entropy or Kullback-Leibler divergence.

The concept was introduced in an original article, and later expanded by Kullback in the book Information Theory and Statistics. It has now found applications in most aspects of information technology, most prominently in artificial neural networks. In this post, I want to give an advanced introduction to this concept, hoping to make it intuitive.

Discriminating information

The original motivation given by Kullback and Leibler is still the best way to expose the main idea, so let us follow their rationale. Suppose that we hesitate between two competing hypotheses $H_1$ and $H_2$. To make things more concrete, say that we have an encrypted message $x$ that may come from two possible...
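As a rough illustration of discriminating between two hypotheses, the sketch below computes the Kullback-Leibler divergence for discrete distributions, using the standard formula $D(P \| Q) = \sum_x p(x) \log \frac{p(x)}{q(x)}$. The two distributions `h1` and `h2` are invented for the example, and the code assumes that `q` is positive wherever `p` is.

```python
import math

def kl_divergence(p, q):
    """D(P || Q) in nats; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical symbol frequencies under two competing hypotheses.
h1 = [0.5, 0.3, 0.2]
h2 = [0.1, 0.1, 0.8]

# Average evidence per symbol in favor of H1 when H1 is true...
print(kl_divergence(h1, h2))
# ...and in favor of H2 when H2 is true. The two numbers differ.
print(kl_divergence(h2, h1))
```

The divergence is zero when the two distributions are identical, and it is not symmetric, which is why it is called a divergence and not a distance.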

On open peer review

By Guillaume Filion, filed under

opinion,

peer review,

science.

• 01 October 2018 •

Among the things that make science unique is the fact that scientists agree on what they say. There can be disagreement, but it is always understood as a temporary state, because either someone will be proven wrong, or new information will eventually reconcile everyone. Agreement is enforced in many ways, but pre-publication peer review is currently the dominant process, and it has been for over a century.

It is surprising that so little information is available about the efficiency of the peer review process. For instance, there is barely any justification as to why it is by default anonymous. Even more surprising is that people who express their opinion in this regard do not back it up with empirical evidence, because there is essentially no data. Let me clarify something: I do not have any data to show. But I have been signing my reviews for over seven years and I am happy to share this experience with those who wonder what happens when you do this.

How did it start?

I was first contacted by editors to review manuscripts at the time Stack Overflow eclipsed nearly all the forums on the Internet. The forums were supposed...

A tutorial on t-SNE (1)

By Guillaume Filion, filed under

statistics,

series: focus on,

bioinformatics,

data visualization.

• 22 August 2018 •

In this tutorial, I would like to explain the basic ideas behind t-distributed Stochastic Neighbor Embedding, better known as t-SNE. There is plenty of excellent material out there explaining how t-SNE works. Here, I would like to focus on why it works and what makes t-SNE special among data visualization techniques.

If you are not comfortable with formulas, you should still be able to understand this post, which is intended to be a gentle introduction to t-SNE. The next post will peek under the hood and delve into the mathematics and the technical detail.

Dimensionality reduction

One thing we all agree on is that we each have a unique personality. And yet it seems that five character traits are sufficient to sketch the psychological portrait of almost everyone. Surely, such portraits are incomplete, but they capture the most important features to describe someone.

The so-called five-factor model is a prime example of dimensionality reduction. It represents diverse and complex data with a handful of numbers. The reduced personality model can be used to compare different individuals, give a quick description of someone, find compatible personalities, predict possible behaviors, etc. In many...

Pro recruitment tip: ask your mum!

By Guillaume Filion, filed under

recruitment,

leadership.

• 09 July 2018 •

The key to success is to choose the right people for your team. That’s what everybody will tell you. But if you ask “how?”, things will get a little more complicated. Practitioners admit that this is a tough problem, and you cannot expect to win all the time. Google attempted to answer the question with the so-called Project Aristotle. After sifting a huge amount of data through the best algorithms, they concluded that you can ignore gender, age, culture, education and pretty much everything else... What makes a good team is not good people, it is good interactions.

This is great news, but it does not really answer the question of how to choose the right people for your team. How does one know that a candidate will interact well with the team? Those were the questions I had in mind when I had to assemble my scientific team. Like almost everyone, I did not have any kind of training in this regard and I was not really prepared. So I sought advice from everybody who would care to give me their opinion, be they colleagues, friends or family members. In the process, I did...

The curse of large numbers (Big Data considered harmful)

By Guillaume Filion, filed under

statistics,

p-values,

big data,

hypothesis testing.

• 10 February 2018 •

According to the legend, King Midas won the sympathy of the Greek god Dionysus, who offered to grant him a wish. Midas asked that everything he touched would turn into gold. At first very happy with his choice, he realized that he had brought a curse on himself, as his food turned into gold before he could eat it.

This legend on the theme “be careful what you wish for” is a cautionary tale about using powers you do not understand. The only “powers” humans ever acquired were technologies, so one can think of this legend as a warning against modernization and against the fact that some things we take for granted will be lost in our desire for better lives.

In data analysis and in bioinformatics, modernization sounds like “Big Data”. And indeed, Big Data is everything we asked for. No more expensive underpowered studies! No more biased small samples! No more invalid approximations! No more p-hacking! Data is good, and more data is better. If we have too much data, we can always throw it away. So what can possibly go wrong with Big Data?

Enter the Big Data world and everything you touch turns...

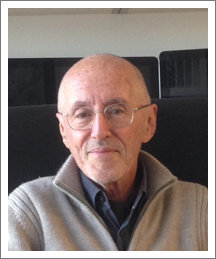

Interview with Miguel Beato

By Guillaume Filion, filed under

interview.

• 07 January 2018 •

Miguel Beato is one of my favourite scientists. We met at the CRG in Barcelona, where we both work and often collaborate. One of the most interesting things about Miguel is that for more than fifty years, he has remained a pioneer in the field of steroid hormones. He embraced every scientific revolution and he keeps pushing scientific progress forward with unmatched panache. I figured I would collect some of his thoughts on the future of science and other topics that I enjoy talking with him about.

Guillaume Filion: What do you think has been the most important revolution in science since the beginning of your career?

Miguel Beato: The transition from analysing single events to global events in the cell. Actually, changing the microscope for statistics.

GF: Why is this so important?

MB: Because we can for the first time look at cells, even at organisms. We have a tool to measure changes and variations that was not available before. This is what enables the kind of approach that we all have at CRG. The only way to study processes is to use networks, circuitry of things you know are connected, and try to understand things this way...

A tutorial on Burrows-Wheeler indexing methods (3)

By Guillaume Filion, filed under

full text indexing,

Burrows-Wheeler transform,

suffix array,

series: focus on,

bioinformatics.

• 14 May 2017 •

The code is written in a very naive style, so you should not use it as a reference for good C code. Once again, the purpose is to highlight the mechanisms of the algorithm, disregarding all other considerations. That said, the code runs, so it may be used as a skeleton for your own projects.

The code is available for download as a GitHub gist. As in the second part, I recommend playing with the variables, and debugging it with gdb to see what happens step by step.

Constructing the suffix array

First you should get familiar with the first two parts of the tutorial in order to follow the logic of the code below. The file learn_bwt_indexing_compression.c does the same thing as in the second part...

A tutorial on Burrows-Wheeler indexing methods (2)

By Guillaume Filion, filed under

full text indexing,

Burrows-Wheeler transform,

suffix array,

series: focus on,

bioinformatics.

• 07 May 2017 •

It makes little sense to implement a Burrows-Wheeler index in a high-level language such as Python or JavaScript because we need tight control of the basic data structures. This is why I chose C. The purpose of this post is not to show how Burrows-Wheeler indexes should be implemented, but to help the reader understand how they work in practice. I tried to make the code as clear as possible, without regard for optimization. It is only a plain, vanilla implementation.

The code runs, but I doubt that it can be used for anything other than demonstrations. First, it is very naive and hard to scale up. Second, it does not use any compression nor down...

When scientific models fail

By Guillaume Filion, filed under

science,

models,

evolution.

• 13 April 2017 •

Scientific models are more of an art than a science. It is much easier to recognize a good scientific model than to make one of our own. As with any art, the best way to learn is to look at the work of the masters and take inspiration from them. One of the crown jewels of modern science is undoubtedly Darwin’s Theory of Evolution. I recently realized that I had no idea how Darwin stood against creationism and how he defended his view against the doxa of his time. Digging into this topic turned out to be one of the most important lessons I learned about the scientific method... and the lack of it.

Darwin touches vividly upon creationism at the end of “On the Origin of Species”. In his own words, he claims that

It is so easy to hide our ignorance under such expressions as the ‘plan of creation’, ‘unity of design’, etc, and to think that we give an explanation when we only restate a fact.

What strikes me here is that he does not accuse the ‘plan of creation’ and the ‘unity of design’ of not being proper scientific concepts. The real...