the Blog

100% non functional

By Guillaume Filion, filed under

intelligent design,

junk DNA,

ENCODE.

• 05 May 2013 •

Panglossian genomics

Like most French students of my generation, I had to study Candide, a short philosophical novella written by Voltaire. Back then, I was convinced that Voltaire was an arrogant prick, and I never imagined that his dumb criticism of Leibniz’s theory of pre-established harmony, which he barely understood, would ever echo in my work as a biologist.

But here we are: years have passed, I have made peace with Voltaire, and the ENCODE consortium has issued its major and controversial statement that they find “biochemical functions for 80% of the genome”. As the arguments and the comments flow on the blogs and in the academic press, I cannot help thinking about the words of Dr. Pangloss, the incarnation of narrow optimism.

Observe, for instance, the nose is formed for spectacles, therefore we wear spectacles. The legs are visibly designed for stockings, accordingly we wear stockings.

What I will call the Panglossian reading of the “80% functional” statement above is the idea that 80% of the genome is meant to be the way it is. The architecture of a given locus is somehow designed to produce what happens there (transcription, transcription enhancing, transcription factor binding etc). Notice...

One shade of authorship attribution

By Guillaume Filion, filed under

IMDB,

automatic authorship attribution,

Python,

planktonrules,

R,

series: IMDB reviews,

machine learning.

• 23 March 2013 •

This article is neither interesting nor well written.

Everybody in academia has a story about reviewer 3. If the words above sound familiar, you will definitely know what I mean, but for the others I should give some context. No decent scientific editor will agree to publish an article without taking advice from experts. This process, called peer review, is usually anonymous and opaque. According to an urban legend, reviewer 1 is very positive, reviewer 2 couldn't care less, and reviewer 3 is a pain in the ass. Believe it or not, the quote above is real, and it is all the review consists of. Needless to say, it was from reviewer 3.

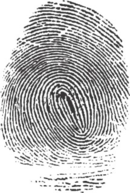

For a long time, I wondered whether there is a way to trace the identity of an author through the text of a review. What methods do stylometry experts use to identify passages from the Q source in the Bible, or to know whether William Shakespeare had a ghostwriter?

The 4-gram method

Surprisingly, the best stylistic fingerprints have little to do with literary style. For instance, lexical richness and complexity of the language are very difficult to exploit efficiently. The unconscious foibles...
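To give an idea of what a 4-gram fingerprint can look like, here is a minimal Python sketch. The excerpt does not say whether the 4-grams are made of characters or words, nor how two fingerprints are compared, so both choices below (overlapping character 4-grams, cosine similarity) are assumptions made for illustration, and the function names `char_ngrams` and `profile_similarity` are hypothetical.

```python
from collections import Counter


def char_ngrams(text, n=4):
    """Count overlapping character n-grams (ASSUMPTION: characters, not words)."""
    # Lower-case and collapse whitespace so that layout does not affect the counts.
    cleaned = " ".join(text.lower().split())
    return Counter(cleaned[i:i + n] for i in range(len(cleaned) - n + 1))


def profile_similarity(a, b):
    """Cosine similarity between two n-gram profiles (ASSUMPTION: not the post's metric)."""
    dot = sum(a[gram] * b[gram] for gram in a.keys() & b.keys())
    norm_a = sum(count * count for count in a.values()) ** 0.5
    norm_b = sum(count * count for count in b.values()) ** 0.5
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


review = "This article is neither interesting nor well written."
profile = char_ngrams(review)
print(profile.most_common(3))
print(profile_similarity(profile, char_ngrams("This article is well written.")))
```

With real data, one would build one profile per known author from many texts and attribute an anonymous review to the author whose profile is most similar.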

Genetics and racism (3)

By Guillaume Filion, filed under

breed,

series: genetics and racism,

race,

species.

• 12 March 2013 •

In the previous posts of this series on genetics and racism, I talked about two recent academic disputes over human races. With this post I hope to give a wider overview of what biology has to say about species, breeds and races.

Darwin’s pigeons

Modern genetics was born in 1900 with the re-discovery of Mendel's laws. Until then, ever since the Neolithic Revolution, genetics had been an empirical art. Our ancestors isolated most of the breeds of animals and plants that we know today, i.e. groups that carry a trait of interest to the next generation when crossed together (for instance Chihuahuas are small dogs and Great Danes are large dogs).

But over the generations, pedigrees got lost in the mists of time, and the overwhelming differences between some breeds of the same species raised the question of whether they share the same natural origin. Before Darwin, it was difficult to imagine that Chihuahuas and Great Danes would have a common ancestor, and the theory went that breeds actually came from different species. This is actually one of the first questions tackled by Darwin in The Origin of Species. In the following passage, he exposes his...

... and academic reprints for all

By Guillaume Filion, filed under

pdftk,

pdf,

reprint,

DRM.

• 16 February 2013 •

Like many other academic journals, Molecular and Cellular Biology takes copyright very seriously. And to trace the criminals who share scientific publications funded by public institutions, they print the date and the identity of the license owner in the margin of the pdf reprints downloaded from their website.

I recently heard that some people downloaded and installed the pdf toolkit pdftk and at the Linux terminal issued a command like the one below, where they replaced article.pdf by the name of the pdf they had downloaded.

[sourcecode:sh]
pdftk article.pdf output uncompressed-article.pdf uncompress
[/sourcecode]

Using their text editor, they opened the uncompressed pdf file and looked for lines like the ones below and commented them out with a % sign (or even deleted them, just in case).

[sourcecode:sh]
10 0 0 10 0 0 cm BT
/R19 11 Tf
0 -1 1 0 579.5 456.847 Tm
[( on some day by Institution of the Evil Person)556]TJ
-94.148 0 Td
[(http://mcb.asm.org/)278]TJ
-89.2543 0 Td
[(Downloaded from )278]TJ
ET
[/sourcecode]

They then ran pdftk again to fix the pdf document, and the download information was...

Genetics and racism (2)

By Guillaume Filion, filed under

racism,

genetics,

series: genetics and racism,

craniometry.

• 19 January 2013 •

In the first post of this series on genetics and racism, I explained how Richard Lewontin concluded from his work on human diversity that human races are of no value for taxonomy (the classification of living beings). This view was later criticized and even termed Lewontin's fallacy by A. W. F. Edwards. Yet, nobody ever doubted that Lewontin was honest in his approach. But more recently came another case that gives the shivers. The great Stephen Jay Gould, the author of the acclaimed Mismeasure of Man, was accused of data manipulation.

The mismeasure of Gould

Stephen Jay Gould was the kind of scientist who pops up everywhere. I discovered him in a comment about the opinion of the Vatican on Evolution, others knew him for his statistical analyses of baseball records, while he was actually a paleontologist, author of the theory of punctuated equilibrium. But his most famous work is undoubtedly The Mismeasure of Man.

Like the author, the book is a strange chimera, somewhere in between scientific research and history, with a touch of lyricism. The Mismeasure of Man is a journey through the differences between people, or more precisely through the scientific discourse over this...

Genetics and racism (1)

By Guillaume Filion, filed under

crimestop,

variability,

series: genetics and racism,

genetics.

• 30 December 2012 •

Important note: Please read the Erratum at the end of the post.

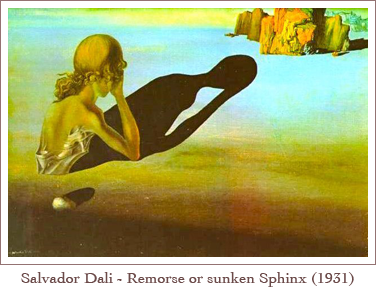

Tolstoy’s remorse

It is 1879. Leo Tolstoy, then rich and famous for War and Peace and Anna Karenina, works on another kind of text.

In A Confession he explains at length that he regrets writing those novels. The focus of his remorse and his anger towards himself is the heart of his talent, this innate sense of human nature. Tolstoy's pen had no equal when it came to painting the Russian society of the time, its characters and its culture. However, he explains that this attitude towards writing is wrong, because he has been telling without preaching, he has been describing without judging. He will even abandon the royalties of War and Peace and Anna Karenina, refusing to earn money from such immoral writings.

We were all then convinced that it was necessary for us to speak, write, and print as quickly as possible and as much as possible, and that it was all wanted for the good of humanity. And thousands of us, contradicting and abusing one another, all printed and wrote — teaching others. And without noticing that we knew nothing, and that...

Unpredictable?

By Guillaume Filion, filed under

information,

probability,

random generator,

randomness.

• 13 November 2012 •

What if I told you to choose a card at random? Simply choose one from a standard deck of 52 cards, think of a card, do not draw one from a real deck... Make sure you have one in mind before you read on.

The illusion

Assuming that you have a card in mind, I bet you chose the Ace of Spades. Of course, I don't know the card you were thinking of, but that is the one I bet on. Every textbook on probability says that I have a 1/52 chance of having guessed right. Actually, that is not quite true... About one out of four readers will choose the Ace of Spades, and one out of seven will choose the Queen of Hearts, as shown by a study by Jay Olson, a researcher in the psychology of magic, and his collaborators.

In this experiment, is the choice of a card purely random? And what does random mean anyway? Even if purely random is not properly defined, most would agree that it means no information at all, or completely unpredictable. The outcome of the experiment above is clearly not what you would call purely random. But...
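One way to put numbers on "not purely random" is to compare the card choice described above with a uniform draw. The sketch below uses Shannon entropy for this, which is my choice of measure and not necessarily the one used in the rest of the post. Only the 1/4 and 1/7 figures come from the text; spreading the remaining probability evenly over the other 50 cards is an assumption made for illustration.

```python
import math

DECK_SIZE = 52

# Figures quoted in the text.
p_ace_of_spades = 1 / 4
p_queen_of_hearts = 1 / 7

# ASSUMPTION: the remaining probability is spread evenly over the other 50 cards.
p_other = (1 - p_ace_of_spades - p_queen_of_hearts) / (DECK_SIZE - 2)

human_choice = [p_ace_of_spades, p_queen_of_hearts] + [p_other] * (DECK_SIZE - 2)
uniform_choice = [1 / DECK_SIZE] * DECK_SIZE


def shannon_entropy(probabilities):
    """Entropy in bits: the average uncertainty about the outcome."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print("Chance of guessing right, uniform draw:", 1 / DECK_SIZE)
print("Chance of guessing right, betting on the Ace of Spades:", max(human_choice))
print("Entropy of a uniform draw (bits):", shannon_entropy(uniform_choice))
print("Entropy of the human choice (bits):", shannon_entropy(human_choice))
```

Under these assumptions the human choice carries less entropy than the uniform draw, which is another way of saying that it is partly predictable.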

Does the Earth have a mind?

By Guillaume Filion, filed under

Turing test,

ecology,

consciousness,

Gaia theory.

• 19 October 2012 •

The life of Jean-Dominique Bauby took a dramatic turn on Friday 8 December 1995. On that day, he had a cerebrovascular accident that left him in a coma. When he woke up twenty days later, his body did not respond: his brainstem was damaged beyond repair. Able to move only his left eyelid, Jean-Dominique patiently wrote a whole book, The Diving Bell and the Butterfly, where he describes his experience.

In the past, it was known as a “massive stroke,” and you simply died. But improved resuscitation techniques have now prolonged and refined the agony. You survive, but you survive with what is so aptly known as “locked-in syndrome.” Paralyzed from head to toe, the patient, his mind intact, is imprisoned inside his own body, unable to speak or move. In my case, blinking my left eyelid is my only means of communication.

Up until his death, three days after the publication of the book, there was no doubt for anyone that Jean-Dominique had retained every aspect of his consciousness. There was no doubt that this motionless mass was genuinely conscious.

The Gaia hypothesis

But how do we know that someone is conscious, what...

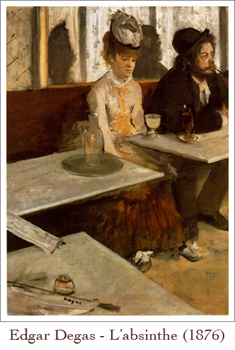

Is there a gene for alcoholism? (2)

By Guillaume Filion, filed under

information,

genetics,

genetic determinism,

neurogenetics,

series: is there a gene for alcoholism?,

missing heritability.

• 17 October 2012 •

In the post Is there a gene for alcoholism? I explained how claims of having discovered the gene for such and such complex behavior (mostly alcoholism and homosexuality) are based on correlations that are never confirmed by experimentation. We will have to wait until neurogenetics comes of age before we can seriously tackle this kind of question. But when that happens, how likely is it that we really discover a gene for alcoholism?

To get my point across, I will have to say a few words about the problem of missing heritability. According to current estimates, the human genome consists of ~ 25,000 protein-coding genes and about as many non protein-coding RNAs, the function of which still remains to be established. The implicit meaning of "gene for alcoholism" is actually a mutation that would somehow affect one of these ~ 50,000 functional entities.

Mutation is somewhat inaccurate in this context as we should speak of polymorphism. A piece of our genome is monomorphic if everybody has exactly the same sequence, otherwise, it is polymorphic. The vast majority of polymorphic sequences in humans are SNPs (single-nucleotide polymorphisms), i.e. sequences that differ by only one nucleotide...
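The definition of monomorphic and polymorphic can be made concrete with a toy example. The Python sketch below assumes that the sequences of different individuals are already aligned and of equal length; the function name `polymorphic_sites` and the three toy sequences are hypothetical and only serve as an illustration.

```python
def polymorphic_sites(sequences):
    """Return the 0-based positions at which aligned sequences are not all identical."""
    if not sequences:
        return []
    length = len(sequences[0])
    if any(len(seq) != length for seq in sequences):
        raise ValueError("sequences must be aligned and of equal length")
    # zip(*sequences) walks through the alignment column by column.
    return [
        position
        for position, column in enumerate(zip(*sequences))
        if len(set(column)) > 1
    ]


# Toy data: three individuals, identical except for one nucleotide.
individuals = ["ACGTAC", "ACGTAC", "ACCTAC"]
sites = polymorphic_sites(individuals)
print(sites)
```

Here the sequence is polymorphic because one position varies between individuals, and since exactly one nucleotide differs, that position would count as a SNP.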

Lessons from Intelligent Design

By Guillaume Filion, filed under

crimestop,

creationism,

evolution,

intelligent design,

consciousness.

• 04 October 2012 •

The first time I heard a friend of mine, a clever guy, claim that he does not believe in Evolution, I was speechless. Over the years I realized something important: he is not alone. As many as 40% of US citizens believe in strict creationism, while only ~ 15% believe in Evolution (source: Gallup polls).

The latest incarnation of creationism, Intelligent Design, received some media attention during the trial of the Dover Area School District. Following the announcement that Intelligent Design would be taught side by side with Evolution in biology classes, a group of parents sued the public school district and finally convinced the judges that this constitutes a breach of the First Amendment of the constitution.

In essence, Intelligent Design claims to build on scientific observations. The rationale of the argument is that biological organisms, human beings in particular, are too complex to be the product of chance. They are designed. And if there is a design, there must be a designer.

Crimestop

If you have read George Orwell’s novel Nineteen Eighty-Four, you will perhaps remember the fictional language Newspeak. By removing words from the English vocabulary, the powers that be gradually enclose the...