One shade of authorship attribution

By Guillaume Filion, filed under planktonrules, Python, machine learning, R, IMDB, series: IMDB reviews, automatic authorship attribution.

• 23 March 2013 •

This article is neither interesting nor well written.

Everybody in academia has a story about reviewer 3. If the words above sound familiar, you will know exactly what I mean, but for the others I should give some context. No decent scientific editor will agree to publish an article without taking advice from experts. This process, called peer review, is usually anonymous and opaque. According to an urban legend, reviewer 1 is very positive, reviewer 2 couldn't care less, and reviewer 3 is a pain in the ass. Believe it or not, the quote above is real, and it is all the review consists of. Needless to say, it was from reviewer 3.

For a long time, I wondered whether there is a way to trace the identity of an author through the text of a review. What methods do stylometry experts use to identify passages from the Q source in the Bible, or to know whether William Shakespeare had a ghostwriter?

The 4-gram method

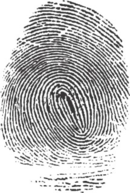

Surprisingly, the best stylistic fingerprints have little to do with literary style. For instance, lexical richness and complexity of the language are very difficult to exploit efficiently. Unconscious foibles, recurrent mistakes and misuse of punctuation betray their author much better, because they tend to be writer invariant.

A very simple method to extract this information is to count all the 4-grams (the sequences of 4 consecutive characters) of a text. For instance, writing spaces as underscores, the 4-grams of "to be or not to be" are 'to_b', 'o_be', '_be_', etc., and the 4-grams 'to_b' and 'o_be' each occur twice. The idea of this decomposition is that the most frequent words of a text will produce the most frequent 4-grams, and that each recurrent mistake will show up in several 4-grams.
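The counting described above fits in a few lines of Python. This is only a minimal sketch: the helper name `char_ngrams` is made up for the illustration, and spaces are printed as underscores just to make them visible.

```python
from collections import Counter

def char_ngrams(text, n=4):
    """Return every run of n consecutive characters of text, from left to right."""
    # A text of length L contains L - n + 1 overlapping n-grams.
    return [text[i:i + n] for i in range(len(text) - n + 1)]

grams = char_ngrams("to be or not to be")
counts = Counter(grams)

# Show the three most frequent 4-grams, with spaces drawn as underscores.
for gram, count in counts.most_common(3):
    print(gram.replace(" ", "_"), count)
```

Note that the text is passed as is, without any tokenization or clean-up.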

In order to catch features such as punctuation errors, mis-capitalization, space omission or space doubling, it is important not to process the text in any way before collecting the 4-grams, which makes the method much simpler than standard Natural Language Processing (see The elements of style). Somewhat ironically, stop words such as 'and', 'the' etc., which are usually filtered out for carrying no semantic content, turn out to be the most informative. Every author uses the most common English words with a slightly different frequency, which then constitutes his/her fingerprint.
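To see why the text should be left untouched, here is a small sketch comparing the 4-grams of a raw sentence with those of a cleaned-up copy. The sentence is invented for the example, and lowercasing plus whitespace collapsing merely stand in for a typical preprocessing step.

```python
from collections import Counter

def char_ngrams(text, n=4):
    """Return every run of n consecutive characters of text."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]

# Invented sentence with a doubled space and a misplaced comma.
raw = "A fine film.  The film is fine ,really."
# Stand-in for standard clean-up: lowercase and collapse runs of whitespace.
normalized = " ".join(raw.lower().split())

raw_counts = Counter(char_ngrams(raw))
normalized_counts = Counter(char_ngrams(normalized))

# 4-grams that exist only in the untouched text.
lost = sorted(set(raw_counts) - set(normalized_counts))
print(lost)
```

Every 4-gram that spans the doubled space or a capital letter disappears after the clean-up, and those are the kind of features the method relies on.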

How good is this?

Remember planktonrules from The geometry of style? I extracted the 4-grams from his reviews and kept the 1,000 most frequent as a feature set (planktonrules uses many double and triple spaces, and uses 'film' much more often than 'movie', unlike most authors). I then used R to train a Support Vector Machine on a random selection of 10,000 reviews from a set of 50,000 and tested the model on the 40,000 remaining reviews (the R and Python code is given below). The accuracy of such a brutal approach is surprisingly high. The error rate is around 2%, with a false negative rate of 6.6% and a false positive rate of 0.3%.
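For reference, the three rates quoted above can be computed from true and predicted labels as sketched here. The sketch assumes the usual definitions (false negative rate: share of the target author's reviews attributed to someone else; false positive rate: share of the other reviews attributed to the target author). The function name and the labels are made up and are not the data of this post.

```python
def error_rates(truth, predicted):
    """Compute error rates for 0/1 labels, where 1 means 'written by the target author'.

    Assumes that both classes are present in truth.
    """
    pairs = list(zip(truth, predicted))
    false_neg = sum(1 for t, p in pairs if t == 1 and p == 0)
    false_pos = sum(1 for t, p in pairs if t == 0 and p == 1)
    positives = sum(1 for t in truth if t == 1)
    negatives = len(pairs) - positives
    return {
        "error_rate": (false_neg + false_pos) / len(pairs),
        "false_negative_rate": false_neg / positives,
        "false_positive_rate": false_pos / negatives,
    }

# Made-up labels for illustration only.
truth     = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
rates = error_rates(truth, predicted)
print(rates)
```

Because the two classes have different sizes, the overall error rate is a weighted average of the two other rates, which is how 6.6% and 0.3% can combine to about 2%.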

The first script collects the 1,000 most frequent 4-grams from a collection of IMDB reviews and saves them as a pickle file. You can create an input for that script from the post The elements of style. Unfold and copy the Python script folded in the Penrose triangle and run it on the dummy input that you can download from there. The dummy input consists of 10 reviews, but none of them is written by planktonrules.

```python
# -*- coding:utf-8 -*-

import json
import sys
import pickle
from collections import defaultdict

# I have the IMDB reviews as JSON documents.
with open(sys.argv[1]) as f:
    reviews = json.load(f)

# Count every 4-gram of the raw, unprocessed review text.
counter = defaultdict(int)
for doc in reviews:
    body = doc['body']
    # A text of n characters contains n-3 overlapping 4-grams.
    for i in range(len(body) - 3):
        counter[body[i:i+4]] += 1

# Pickle the 1000 most used 4-grams.
features = sorted(counter, key=counter.get, reverse=True)[:1000]
# Binary mode and protocol 2 keep the file readable by Python 2 and 3.
with open('features.pic', 'wb') as f:
    pickle.dump(features, f, protocol=2)
```

The second script was run on two input files, the first containing a random sample of IMDB reviews and the second containing all the reviews written by planktonrules up until 2011. The output was redirected to a file called scores.txt.

```python
# -*- coding:utf-8 -*-

import re
import sys
import json
import pickle
from collections import defaultdict

planktonrules = 'ur2467618'

# Load the 4-grams saved by the first script.
with open('features.pic', 'rb') as f:
    features = pickle.load(f)
featureset = set(features)

# The script takes two file names as arguments.
with open(sys.argv[1]) as f:
    reviews = json.load(f)
with open(sys.argv[2]) as f:
    plank = json.load(f)

# Print the header (non-word characters are replaced to get valid column names).
sys.stdout.write('planktonrules\t' +
        '\t'.join([re.sub(r'\W', '_', feat) for feat in features]) + '\n')

# Each line corresponds to a review.
for doc in reviews + plank:
    auth = 1 if doc['authid'] == planktonrules else 0
    counter = defaultdict(int)
    body = doc['body']
    for i in range(len(body) - 3):
        key = body[i:i+4]
        if key in featureset:
            counter[key] += 1
    sys.stdout.write(str(auth) + '\t' +
            '\t'.join([str(counter[a]) for a in features]) + '\n')
```

The R session to train and test an SVM is tiny if you use the package e1071. Fitting the SVM takes a few minutes, and so does getting the predictions.

```r
library(e1071)

# One row per review: the author flag, then the counts of the 4-grams.
scores <- read.delim('scores.txt')

# Train on 10,000 random reviews and test on the rest.
train <- sample(nrow(scores), 10000)
trainset <- scores[train,]
testset <- scores[-train,]

model <- svm(as.factor(planktonrules) ~ ., data=trainset)
predictions <- predict(model, newdata=testset)

# Accuracy on the test set.
mean(predictions == testset[,1])
```

I tried several other classifiers (logistic regression, LDA, QDA and CART), but SVM always gave the best results. LDA gave a reasonable fit, but the false negative rate never dropped below 15%, no matter how many 4-grams I included. I also tried other feature sets, such as the most common 4-grams of the corpus (not necessarily used by planktonrules) and the 4-grams whose frequency differs the most between planktonrules and the rest of the writers, but the results were not as good.
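As an illustration of the last feature set mentioned above, here is one possible way to pick the 4-grams whose frequency differs the most between one author and everybody else. It is a sketch, not the code used for this post: ranking by the absolute difference of relative frequencies is an assumption, and the function names and toy texts are invented.

```python
from collections import Counter

def ngram_frequencies(texts, n=4):
    """Relative frequency of each character n-gram over a list of texts."""
    counts = Counter()
    for text in texts:
        counts.update(text[i:i + n] for i in range(len(text) - n + 1))
    total = sum(counts.values())
    return {gram: c / total for gram, c in counts.items()} if total else {}

def most_different_ngrams(author_texts, other_texts, k=1000, n=4):
    """Return the k n-grams with the largest frequency gap between the two groups."""
    author = ngram_frequencies(author_texts, n)
    others = ngram_frequencies(other_texts, n)

    def gap(gram):
        # Assumed criterion: absolute difference of relative frequencies.
        return abs(author.get(gram, 0.0) - others.get(gram, 0.0))

    # The n-gram itself breaks ties, so that the result is reproducible.
    return sorted(set(author) | set(others), key=lambda g: (-gap(g), g))[:k]

# Toy texts: one writer says 'film' and doubles spaces, the other says 'movie'.
author_texts = ["A fine film.  A very fine film."]
other_texts = ["A fine movie. A very fine movie."]
top = most_different_ngrams(author_texts, other_texts, k=5)
print(top)
```

The returned list could replace the `features` list pickled by the first script, leaving the rest of the pipeline unchanged.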

Does that mean that I can catch the author of the brilliant quote that introduced this post? Not very likely of course, because the text is very short. And also because I do not have a reference set for peer reviews. The example given above has it easy, in the sense that it is a binary classifier. Building such classifiers for a large set of authors must be substantially more difficult. But we can bet that Google has already done it. With access to your mail and everything you write in Google Docs/Google Drive, they probably have stylistic fingerprints for a large portion of the Internet community. I guess I should work on a stylistic fingerprint eraser then...
