One shade of authorship attribution

By Guillaume Filion, filed under planktonrules, Python, machine learning, R, IMDB, series: IMDB reviews, automatic authorship attribution.

23 March 2013

This article is neither interesting nor well written.

Everybody in academia has a story about reviewer 3. If the words above sound familiar, you will know exactly what I mean, but for the others I should give some context. No decent scientific editor will agree to publish an article without taking advice from experts.

This process, called peer review, is usually anonymous and opaque. According to an urban legend, reviewer 1 is very positive, reviewer 2 couldn't care less, and reviewer 3 is a pain in the ass. Believe it or not, the quote above is real, and it is all the review consists of. Needless to say, it was from reviewer 3.

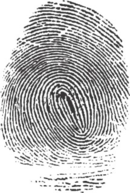

For a long time, I wondered whether there is a way to trace the identity of an author through the text of a review. What methods do stylometry experts use to identify passages from the Q source in the Bible, or to know whether William Shakespeare had a ghostwriter?

The 4-gram method

Surprisingly, the best stylistic fingerprints have little to do with literary style. For instance, lexical richness and complexity of the language are very difficult to exploit efficiently. The unconscious foibles...