the Blog

Unpredictable?

By Guillaume Filion, filed under

information,

randomness,

probability,

random generator.

• 13 November 2012 •

What if I told you to choose a card at random? Simply think of one card from a standard deck of 52 cards, without drawing from a real deck... Make sure you have one in mind before you read on.

The illusion

Assuming that you have a card in mind, I bet you chose the Ace of Spades. Of course, I don't know the card you were thinking of, but that is the one I bet on. Every textbook on probability says that I have a 1/52 chance of having guessed right. Actually, that is not quite true... About one out of four readers will choose the Ace of Spades, and one out of seven will choose the Queen of Hearts, as shown in a study by Jay Olson, a researcher in the psychology of magic, and his collaborators.

In this experiment, is the choice of a card purely random? And what does random mean anyway? Even if purely random is not properly defined, most would agree that it means no information at all, or completely unpredictable. The outcome of the experiment above is clearly not what you would call purely random. But...

Is there a gene for alcoholism? (2)

By Guillaume Filion, filed under

information,

genetics,

neurogenetics,

genetic determinism,

series: is there a gene for alcoholism?,

missing heritability.

• 17 October 2012 •

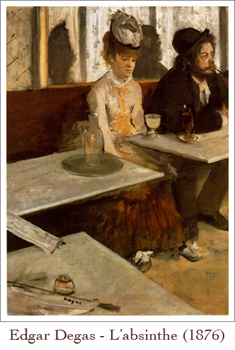

In the post Is there a gene for alcoholism? I explained how claims to have discovered the gene for such and such a complex behavior (mostly alcoholism and homosexuality) are based on correlations that are never confirmed by experimentation. We will have to wait until neurogenetics comes of age before we can seriously tackle this kind of question. But when that happens, how likely is it that we will really discover a gene for alcoholism?

To get my point across, I will have to say a few words about the problem of missing heritability. According to current estimates, the human genome contains ~ 25,000 protein-coding genes and about as many non-protein-coding RNAs, the function of which still remains to be established. The implicit meaning of "gene for alcoholism" is actually a mutation that would somehow affect one of these ~ 50,000 functional entities.

Mutation is somewhat inaccurate in this context, as we should speak of polymorphism. A piece of our genome is monomorphic if everybody has exactly the same sequence; otherwise, it is polymorphic. The vast majority of polymorphic sequences in humans are SNPs (single-nucleotide polymorphisms), i.e. sequences that differ by only one nucleotide...

Is there a gene for alcoholism? (1)

By Guillaume Filion, filed under

causality,

genetics,

series: is there a gene for alcoholism?,

independence,

information.

• 26 August 2012 •

This is usually the next thing I hear when I say that I am a geneticist. Behind this question and its variants lies a profound and natural interrogation, which could be phrased as "how much of me is the product of my genes?" I have made a habit of not answering that question and instead highlighting its inanity by lecturing people about genetics. So, for once, and exclusively on my blog, here is the tl;dr answer: no, there is not. Now comes the lecture about genetics.

I will start with mental retardation (unrelated to my opinion of those claims, really) and more precisely with the fragile X syndrome. James Watson, the co-discoverer of the structure of DNA and the pioneer of the Human Genome Project, declared:

I think it was the first triumph of the Human Genome Project. With fragile X we've got just one protein missing, so it's a simple problem. So, you know, if I were going to work on something with the thought that I were going to solve it, oh boy, I'd work on fragile X.

In other words, there seems to be a gene for mental retardation. The incidence...

Lost in punctuation

By Guillaume Filion, filed under

information,

series: IMDB reviews,

information retrieval,

IMDB,

movies.

• 26 May 2012 •

What is the difference between The Shawshank Redemption and Superbabies: Baby Geniuses 2? Besides all other differences, The Shawshank Redemption is the best movie in the world and Superbabies: Baby Geniuses 2 is the worst, according to IMDB users (check a sample scene of Superbabies: Baby Geniuses 2 if you believe that the worst movie of all time is Plan 9 from Outer Space or Manos: the Hands of Fate).

IMDB users not only rank movies, they also write reviews, and this is where things turn really awesome! Give Internet users the space and freedom to express themselves and you get Amazon's Tuscan whole milk or Food Network's late night bacon recipe. By now IMDB reviews have secured their place in the Internet pantheon, as you can check from absolutedreck.com or shittyimdbreviews.tumblr.com. But as far as I am aware, nobody has taken this data seriously and tried to understand what IMDB reviewers have to say. So let's scratch the surface.

I took a random sample of exactly 6,000 titles from the ~ 200,000 feature films on IMDB. This is less than 3% of the total, but this amount is sufficient to...

Poetry and optimality

By Guillaume Filion, filed under

information,

independence,

probability.

• 21 May 2012 •

Claude Shannon was a hell of a scientist. His work in the field of information theory (and in particular his famous noisy channel coding theorem) shaped the modern technological landscape, but also gave profound insight into the theory of probabilities.

In my previous post on statistical independence, I argued that causality is not a statistical concept, because all that matters to statistics is the sampling of events, which may not reflect their occurrence. On the other hand, the concept of information fits gracefully in the general framework of Bayesian probability and gives a key interpretation of statistical independence.

Shannon defines the information of an event $(A)$ with probability $(P(A))$ as $(-\log P(A))$. For years, this definition baffled me with its simplicity and its abstruseness. Yet it is actually intuitive. Let us call $(\Omega)$ the system under study and $(\omega)$ its state. You can think of $(\Omega)$ as a set of possible messages and of $(\omega)$ as the true message transmitted over a channel, or (if you are Bayesian) of $(\Omega)$ as a parameter set and $(\omega)$ as the true value of the parameter. We have total information about the system if we know $(\omega)$. If instead, all...
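
To get a feel for the definition $(-\log P(A))$, here is a minimal Python sketch. The function name `information` and the choice of base 2 (so that the unit is the bit) are assumptions made for illustration; the definition itself does not fix the base of the logarithm.

```python
import math


def information(p):
    """Shannon information of an event with probability p, in bits.

    Computes -log2(p), written as log2(1 / p) so that a certain
    event (p = 1) yields 0.0 rather than a negative zero.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return math.log2(1.0 / p)


# A certain event carries no information.
bits_certain = information(1.0)

# The outcome of a fair coin toss carries exactly one bit.
bits_coin = information(0.5)

# One specific card drawn uniformly from a 52-card deck: about 5.7 bits.
bits_card = information(1 / 52)

# For two events whose joint probability is the product p * q,
# the information of observing both is the sum of their informations.
p, q = 0.5, 1 / 52
bits_joint = information(p * q)
```

The rarer the event, the more information its observation carries, and a certain event carries none. The last lines show why the logarithm is a natural choice: it turns products of probabilities into sums of information.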

The fallacy of (in)dependence

By Guillaume Filion, filed under

information,

causality,

probability,

independence.

• 04 May 2012 •

In the post Why p-values are crap I argued that independence is a key assumption of statistical testing and that it almost never holds in practical cases, explaining how p-values can be insanely low even in the absence of an effect. However, I did not explain how to test independence. As a matter of fact, I did not even define independence, because the concept is much more complex than it seems.

Apart from the singular case of Bayes' theorem, which I referred to in my previous post, the many conflicts of probability theory have been settled by axiomatization. Instead of saying what probabilities are, the current definition says what properties they have. Likewise, independence is defined axiomatically by saying that events $(A)$ and $(B)$ are independent if $(P(A \cap B) = P(A)P(B))$, or in English, if the probability of observing both is the product of their individual probabilities. Not very intuitive, but if we recall that $(P(A|B) = P(A \cap B)/P(B))$, we see that an alternative formulation of the independence of $(A)$ and $(B)$ is $(P(A | B) = P(A))$. In other words, if $(A)$ and $(B)$ are independent, observing...
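
The product rule $(P(A \cap B) = P(A)P(B))$ can be checked by brute force on a small sample space. The sketch below is only an illustration: the two-dice example, the event names and the helper functions `prob` and `independent` are assumptions that do not come from the post. It uses exact fractions so that the equality test is not blurred by floating-point rounding.

```python
from fractions import Fraction
from itertools import product

# Sample space: the 36 equally likely outcomes of rolling two fair dice.
OMEGA = list(product(range(1, 7), repeat=2))


def prob(event):
    """Exact probability of an event, given as a predicate on outcomes."""
    favorable = sum(1 for outcome in OMEGA if event(outcome))
    return Fraction(favorable, len(OMEGA))


def independent(a, b):
    """True if P(A and B) == P(A) * P(B) on the sample space OMEGA."""
    p_both = prob(lambda w: a(w) and b(w))
    return p_both == prob(a) * prob(b)


def first_is_even(w):
    return w[0] % 2 == 0


def sum_is_seven(w):
    return sum(w) == 7


def sum_is_eight(w):
    return sum(w) == 8


# P(first even) = 1/2, P(sum = 7) = 1/6, P(both) = 1/12: independent.
seven_case = independent(first_is_even, sum_is_seven)

# P(sum = 8) = 5/36 but P(first even and sum = 8) = 1/12: not independent.
eight_case = independent(first_is_even, sum_is_eight)

# Alternative formulation: P(A | B) = P(A and B) / P(B) equals P(A).
p_a_given_b = prob(lambda w: first_is_even(w) and sum_is_seven(w)) / prob(sum_is_seven)
```

Knowing that the sum is seven says nothing about the parity of the first die, whereas knowing that the sum is eight does, even though both events are defined in the same way.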