The curse of large numbers (Big Data considered harmful)

By Guillaume Filion, filed under statistics, hypothesis testing, big data, p-values.

• 10 February 2018 •

According to the legend, King Midas won the sympathy of the Greek god Dionysus, who offered to grant him a wish. Midas asked that everything he touched would turn into gold. At first very happy with his choice, he soon realized that he had brought a curse on himself, as his food turned into gold before he could eat it.

This legend on the theme “be careful what you wish for” is a cautionary tale about using powers you do not understand. The only “powers” humans ever acquired were technologies, so one can think of this legend as a warning against modernization and against the fact that some things we take for granted will be lost in our desire for better lives.

In data analysis and in bioinformatics, modernization sounds like “Big Data”. And indeed, Big Data is everything we asked for. No more expensive underpowered studies! No more biased small samples! No more invalid approximations! No more p-hacking! Data is good, and more data is better. If we have too much data, we can always throw it away. So what can possibly go wrong with Big Data?

Enter the Big Data world and everything you touch turns into gold. The statistical tests are significant, the asymptotic approximations are robust, and estimations are accurate. But like King Midas, you should be careful because the price for Big Data is the “curse of large numbers”.

Nowadays most students learn that statistical tests have two possible outcomes, usually referred to as significant and not significant. But in the Big Data world, every statistical test is always significant. Now stop. Rewind. Read this again. “Every statistical test is always significant”... Wait, did I just write that statistical testing on Big Data makes no sense? Fortunately, I did not. But statistical tests on Big Data can be harmful if you do not understand how they work.

Back to basics: the null hypothesis

As a beginner in statistics, I remember being confused about one important thing. Every statistical test has a null hypothesis of the form “the correlation between the variables is 0”. Because statistics are approximate, I understood such hypotheses as “the correlation between the variables is approximately 0”. As it turns out, this was the most harmful misconception I could have had. Indeed, with this in mind, I thought that rejecting the null hypothesis meant rejecting the idea that the correlation is small, and therefore that it must be large (in absolute value). This opinion was reinforced by the fact that the correlation was called significant, and I thought that a lower p-value meant a higher correlation (in absolute value).

In reality, the null hypothesis should be read “the correlation between the variables is exactly 0”. Failing to reject the null hypothesis when the correlation is 0.00001 is a statistical failure. The test is designed to reject the null hypothesis in this case, but will often fail to do so for lack of statistical power. The fact that one may tolerate this failure does not make it a success.

Now, for a given effect size and significance level, the statistical power is essentially a function of the size of the data set. In simpler words, with more data points we can notice smaller differences. If the correlation is 0.00001, there is a data set large enough so that the null hypothesis will almost certainly be rejected. This is how statistical tests are designed.
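To get a feel for how large “large enough” is, here is a minimal Python sketch (not part of the original post). It relies on the textbook Fisher z-transform approximation for a correlation coefficient. The function name, the 5% significance level and the 80% power target are assumptions chosen for illustration.

```python
from math import atanh, ceil
from statistics import NormalDist


def sample_size_for_correlation(r, alpha=0.05, power=0.8):
    """Approximate sample size at which a two-sided test of
    'the correlation is exactly 0' rejects with the given power,
    assuming the true correlation is r (r must be nonzero)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # rejection threshold
    z_power = std_normal.inv_cdf(power)          # margin needed for the power
    # Fisher's z-transform: atanh(sample correlation) is roughly normal
    # with standard error 1 / sqrt(n - 3).
    return ceil(((z_alpha + z_power) / atanh(abs(r))) ** 2 + 3)


# The correlation values below are arbitrary illustrations.
for r in (0.5, 0.1, 0.01, 0.00001):
    print(f"true correlation {r:g}: n of about {sample_size_for_correlation(r):,}")
```

Under these assumptions, a correlation of 0.5 is detectable with a few dozen data points, while a correlation of 0.00001 requires tens of billions. The required sample size is large, but it is always finite.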

Back to my initial misconception, this means that rejecting the null does not say anything about the value of the correlation. “At least we know it is not 0” some will argue. And they are right. But did we really believe it could have been the case? Did we really believe that the variables have been sampled with perfect independence? Did they have exactly the same distribution? Did the numbers have infinite precision? Was the correlation exactly 0? Of course not. Anyone who has seen a real world data set knows that this is never the case.

Batch effects, outliers, sampling biases and experimental artifacts are in every data set. Most of the time they are invisible on small data sets just because the effects are small. With Big Data they are equally small, but this time they are visible and they tilt the balance towards rejecting the null. This is the curse of large numbers. Big Data opens a can of worms that we did not have to care about before.
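The effect can be reproduced with a small simulation. The following Python sketch is an illustration under stated assumptions, not an analysis of real data: the shift of 0.02 standard deviations stands for a hypothetical batch effect, the sample sizes are arbitrary, and the test is a large-sample normal approximation to Welch's t test (slightly optimistic for the small sample).

```python
import random
from math import erfc, sqrt
from statistics import fmean


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    m = fmean(values)
    return m, sum((v - m) ** 2 for v in values) / (len(values) - 1)


def two_sample_p_value(x, y):
    """Two-sided p-value for 'both groups have exactly the same mean',
    using the normal approximation to Welch's t test."""
    mean_x, var_x = mean_and_variance(x)
    mean_y, var_y = mean_and_variance(y)
    z = (mean_x - mean_y) / sqrt(var_x / len(x) + var_y / len(y))
    return erfc(abs(z) / sqrt(2))  # equals 2 * P(Z > |z|)


rng = random.Random(1)
shift = 0.02  # hypothetical batch effect, in units of standard deviation

for n in (100, 500_000):
    batch_a = [rng.gauss(0.0, 1.0) for _ in range(n)]
    batch_b = [rng.gauss(shift, 1.0) for _ in range(n)]
    print(f"n = {n:>7} per group: p = {two_sample_p_value(batch_a, batch_b):.3g}")
```

The shift is the same in both runs. Only the sample size changes, and with it the ability of the test to see the shift.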

Why is Big Data harmful?

Let us see how a naive investigator can be fooled by the curse of large numbers. Alan has reasons to believe that the (fictitious) gene KAPPA1 has something to do with cancer. He downloads a huge data set from cancer patients and sets up a Student’s t test. “I knew it”, he thinks when he sees that the test is significant. KAPPA1 expression is lost in patients with the most aggressive cancers.

“So the role of KAPPA1 may be to protect against cancer...” Alan speculates. “If this is the case, then it should be the reverse for KAPPA2, which is known to inhibit KAPPA1.” Alan sets up a Student’s t test and confirms with great satisfaction that the expression of KAPPA2 is increased in aggressive cancers.

As Alan writes up a manuscript about the fundamental role of KAPPA1 and KAPPA2 in cancer, let us think about what just happened. What was the probability that Alan would confirm that KAPPA1 has anything to do with cancer? With his approach, we can assume that the probability is close to 1. Since the data set is large, he will find either more or less KAPPA1 in the most aggressive cancers, but not exactly the same. Since both count as “having something to do with cancer”, Alan would follow up on KAPPA1 either way.

Next, what is the probability that KAPPA2 is more expressed in aggressive cancers? Without more information, we can say that this probability is approximately 0.5. In large data sets, genes either go up or go down. They cannot remain exactly constant. If Alan had found more KAPPA1 in aggressive cancers, he would have formulated the opposite hypothesis for KAPPA2, and he would still validate it with probability 0.5.

Alan could have started with any irrelevant pair of genes; in half of the cases he would come up with the same conclusion. In the other half of the cases, he would conclude that KAPPA1 and KAPPA2 have similar effects on cancer. We cannot know what he would do with this information, but chances are that he would come up with an explanation (for instance that KAPPA2 inhibits KAPPA1 but also prevents cancer) and continue with his initial model.
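The reasoning above can be checked with a toy simulation. In this Python sketch, each trial draws two small effects at random for a pair of irrelevant genes (the normal distribution and its scale of 0.01 are arbitrary assumptions) and supposes the data set is so large that both tests come out significant, so that only the signs of the effects matter.

```python
import random


def alan_confirms_model(rng):
    """One trial: True if the two genes move in opposite directions,
    which is what Alan reads as confirmation of his model."""
    # Hypothetical tiny effects, unrelated to each other and to cancer.
    effect_gene_1 = rng.gauss(0.0, 0.01)
    effect_gene_2 = rng.gauss(0.0, 0.01)
    # Assumption: the data set is large enough that both effects are
    # declared significant, whatever their size.
    return (effect_gene_1 > 0) != (effect_gene_2 > 0)


rng = random.Random(0)
trials = 10_000
confirmed = sum(alan_confirms_model(rng) for _ in range(trials))
print(f"model 'confirmed' in {confirmed / trials:.1%} of random gene pairs")
```

The fraction comes out close to one half, even though none of the simulated genes has any meaningful effect.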

Alan always wins, even with false hypotheses. If he is not aware of this, then the chances are that his study is plain crackpot.

OK, but what do we do about it?

The curse of large numbers is a very severe problem, but the good news is that the fix is not very hard. It is important to realize that Alan never cares about anything other than statistical tests and their p-values. This is what puts him at risk. Fortunately, p-values are far from being the only tool in the statistical toolbox.

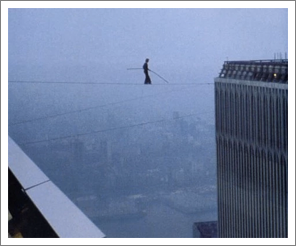

Breaking the curse is as simple as using Big Data for estimation instead of testing. The same statistical power that gives tiny p-values all the time can be used to get very accurate estimations. Instead of asking “is the correlation exactly 0?”, why not ask “what is the value of the correlation?”. It’s that simple, really.

For instance, compare “the correlation is significantly different from zero (P < 0.001)” with “the correlation is between 0.002 and 0.008 with 99.9% confidence”. The first statement comes from a statistical test and it intuitively suggests that the variables are correlated. The second comes from an interval estimation with the same data and it suggests that this correlation is unlikely to be higher than 0.008, which is totally negligible in most practical situations.
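As a rough illustration of such an interval estimate, here is a Python sketch based on the standard Fisher z-transform approximation. The function name is made up for this example, and the inputs (an observed correlation of 0.005 on 1.2 million data points) are hypothetical values picked so that the result lands near the interval quoted above.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def correlation_ci(r, n, confidence=0.999):
    """Approximate confidence interval for a correlation coefficient,
    given the observed correlation r and the number of data points n."""
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # On the Fisher scale, atanh(r) is roughly normal with
    # standard error 1 / sqrt(n - 3).
    half_width = z_crit / sqrt(n - 3)
    center = atanh(r)
    # Transform the bounds back to the correlation scale.
    return tanh(center - half_width), tanh(center + half_width)


# Hypothetical inputs, not taken from any real data set.
low, high = correlation_ci(r=0.005, n=1_200_000)
print(f"99.9% confidence interval: [{low:.4f}, {high:.4f}]")
```

The interval excludes 0, so the corresponding test would be significant, yet its upper bound shows at a glance that the correlation is tiny.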

Which way you do it does not matter so much, as long as you try to box the most important variables of your problem within quantitative bounds. For instance, confidence intervals work just fine. In R they are even in the default output of Student’s t test; investigators just do not pay attention to them. In general, obtaining a confidence interval is not that difficult.
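For a difference between two group means, a confidence interval can be obtained from summary statistics alone. The Python sketch below uses a large-sample normal approximation, which is an assumption that suits Big Data but not small samples. The function name and the expression values for KAPPA1 are invented for the example.

```python
from math import sqrt
from statistics import NormalDist


def mean_difference_ci(mean_a, sd_a, n_a, mean_b, sd_b, n_b, confidence=0.95):
    """Approximate confidence interval for mean_a - mean_b, computed
    from the mean, standard deviation and size of each group."""
    standard_error = sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    difference = mean_a - mean_b
    return difference - z_crit * standard_error, difference + z_crit * standard_error


# Invented summary statistics for KAPPA1 expression (arbitrary units):
# aggressive cancers versus all other cancers.
low, high = mean_difference_ci(
    mean_a=4.98, sd_a=1.0, n_a=500_000,
    mean_b=5.00, sd_b=1.0, n_b=500_000,
)
print(f"difference in mean expression: between {low:.4f} and {high:.4f}")
```

With these made-up numbers the interval does not contain 0, so a test would be significant, but the whole interval lies within a few hundredths of a standard deviation of 0. This is the information Alan would need in order to decide whether KAPPA1 is worth following up on.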

Conclusion

Big Data won’t fool the investigators who care for the magnitude of the effects more than the significance of the tests. By rerouting the statistical power that yields meaningless significant p-values, we can obtain tight bounds on interval estimates instead. With this knowledge, we can quickly decide that a phenomenon is not worth following up on, we can understand the data better and identify the large effects, which are always those giving the most leverage for action.

The statistical community is aware of this problem (and many others) associated with the prevalence of p-values. The American Statistical Association has recently encouraged statisticians to propose alternatives to p-values. A very insightful discussion about this can be found on Cross Validated.
