The geometry of style

By Guillaume Filion, filed under planktonrules, co-inertia analysis, information retrieval, IMDB, principal component analysis, series: IMDB reviews.

This is it! I have been preparing this post for a very long time and I will finally tell you what is so special about IMDB user 2467618, also known as planktonrules. But first, let me take you back to where we left off in this post series on IMDB reviews.

In the first post I analyzed the style of IMDB reviews to learn which features best predict the grade given to a movie (a kind of analysis known as feature extraction). Surprisingly, the punctuation and the length of the review are more informative than the vocabulary. Reviews that give a medium mark (i.e. around 5/10) are longer and thus contain more full stops and commas.

Why would reviewers spend more time on a movie rated 5/10 than on a movie rated 10/10? There are at least two possibilities, which are not mutually exclusive. Perhaps the absence of a strong emotional response (good or bad) makes the reviewer more descriptive. Alternatively, the reviewers who give extreme marks may not be the same as those who give medium marks. The underlying question is how much the style of a single reviewer changes with his/her mood. Is this enough to explain the trend?

I first thought it would be impossible to answer this question. That is when I noticed that a single user had reviewed 7.5% of the movies in my random sample. If you are not impressed by this figure, let me tell you more about planktonrules. Last time I checked, planktonrules had written 12,741 IMDB reviews. This is three times the size of the full Harry Potter series. The deluge starts in 2005 (apart from a single isolated review in 2003) and has not stopped since. That's an average of 1820 reviews per year, or 5 reviews every day for 7 years. Even more impressive is the time it takes to actually watch 5 movies every single day for so long. In any event, such a massive sample makes it possible to study how much the style of a single writer can vary.
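
The per-year and per-day averages come from spreading the total over the 7 years of activity mentioned above:

$$
\frac{12\,741 \text{ reviews}}{7 \text{ years}} \approx 1820 \text{ reviews per year},
\qquad
\frac{1820 \text{ reviews per year}}{365 \text{ days per year}} \approx 5 \text{ reviews per day}.
$$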

But how do we do that? In the second post of this series I explained how a text (actually a bag of words) can be seen as a point in a space of very high dimension. Yet, for most people, visualizing objects in 500 dimensions is hard. This is why the statistician's toolkit contains dimension reduction techniques, which give approximate representations of those objects in two dimensions. This can be thought of as casting a shadow: the shadow of an object is a two-dimensional representation of its real shape and, depending on where the light comes from, it will be more or less faithful to the original. The masterpieces of the shadow artist Shigeo Fukuda illustrate this better than I can with words. Under a specific light, his unfamiliar creations cast a familiar shadow: a heap of rubbish becomes two lovers, a random mass of forks and knives becomes a motorbike.
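
To make the "point in a space of very high dimension" concrete, here is a minimal Python sketch. The tokenizer (lower-cased words, with '!' and '?' kept as tokens) and the function names are hypothetical, not the code behind the figures. A review becomes a vector with one coordinate per vocabulary entry, and its "shadow" is its orthogonal projection on the plane spanned by two orthonormal directions `u` and `v`, i.e. the plane facing the light.

```python
import re
from collections import Counter

def bag_of_words(text):
    """Count the tokens of a review, ignoring their order."""
    # Hypothetical tokenizer: lower-cased words, plus '!' and '?' kept as tokens.
    return Counter(re.findall(r"[a-z']+|[!?]", text.lower()))

def to_point(bag, vocabulary):
    """One coordinate per vocabulary entry: the review becomes a point."""
    return [bag.get(token, 0) for token in vocabulary]

def shadow(point, u, v):
    """Coordinates of the orthogonal projection on the plane spanned by orthonormal u, v."""
    return (sum(x * a for x, a in zip(point, u)),
            sum(x * b for x, b in zip(point, v)))

# Usage: a 4-dimensional toy vocabulary, projected on the "great" and "!" axes.
vocabulary = ["great", "boring", "!", "?"]
point = to_point(bag_of_words("Great movie!! Great cast!"), vocabulary)
print(point)                                      # [2, 0, 3, 0]
print(shadow(point, [1, 0, 0, 0], [0, 0, 1, 0]))  # (2, 3)
```

With a real vocabulary of a few hundred entries the point lives in hundreds of dimensions, and the choice of `u` and `v` (the direction of the light) is exactly what dimension reduction techniques decide.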

While Plato, through his allegory of the cave, invites us to look at the real objects, statisticians and Shigeo Fukuda passionately observe the shadows. Most dimension reduction techniques find "interesting" shadows of high-dimensional objects. Among those, the most standard is principal component analysis (PCA), which gives the shadow closest to the original shape. As illustrated by the work of Shigeo Fukuda, those shadows are not always the most interesting. An uncelebrated alternative to PCA, known as co-inertia analysis (CIA), gives another kind of shadow. I will come back to CIA in the future, but for now I will only say that it finds the shadows of two objects that best preserve their relationship.
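
Both kinds of shadow can be sketched with a singular value decomposition. This is a minimal illustration, not the code behind the figures. PCA keeps the directions of largest variance of the centred table, which is what makes its shadow the closest to the original shape in the least-squares sense. For CIA I assume the simplest variant, with uniform row weights and unweighted columns (implementations such as `coinertia` in the R package ade4 start from two weighted analyses instead): the pairs of axes come from the SVD of the cross-product of the two centred tables, so each pair of projections has maximal co-inertia.

```python
import numpy as np

def pca_shadow(X, k=2):
    """PCA: project the rows of X on the k directions of largest variance."""
    Xc = X - X.mean(axis=0)                      # centre every column
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T                         # (n_rows, k) shadow coordinates

def cia_shadows(X, Y, k=2):
    """Simplified co-inertia analysis of two tables sharing the same rows.

    Finds unit axes u_j (for X) and v_j (for Y) maximising the co-inertia
    (Xc @ u_j) . (Yc @ v_j), i.e. the covariance up to a factor 1/n.
    Uniform row weights and unweighted columns are simplifying assumptions.
    """
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    U, s, Vt = np.linalg.svd(Xc.T @ Yc, full_matrices=False)
    return Xc @ U[:, :k], Yc @ Vt[:k].T, s[:k]

# Usage on toy data: 100 "reviews", 20 word counts, and a 3-column second table.
rng = np.random.default_rng(0)
X = rng.poisson(1.0, size=(100, 20)).astype(float)
Y = rng.normal(size=(100, 3))
coords = pca_shadow(X)
x_shadow, y_shadow, s = cia_shadows(X, Y)
print(coords.shape, x_shadow.shape, y_shadow.shape)  # (100, 2) (100, 2) (100, 2)
```

A useful property of this construction: the co-inertia of the first pair of projections, `x_shadow[:, 0] @ y_shadow[:, 0]`, equals the top singular value `s[0]`, and no other pair of unit axes does better.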

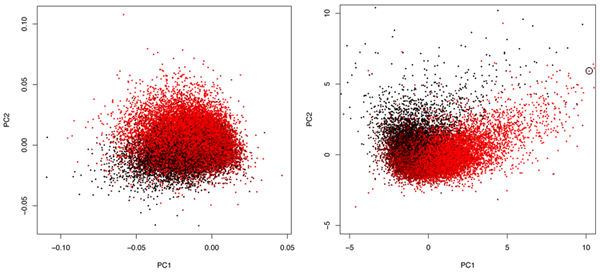

I illustrate the difference between PCA and CIA in the first figure. I have projected (i.e. cast the shadows of) the 29,415 IMDB reviews of my random sample with both methods. Each dot is a review, and reviews that use similar words (i.e. similar bags of words) are projected close to each other. I drew the dots in black if the review rates the movie 1/10, in red if it rates it 10/10, and used a black-to-red gradient for intermediate marks. As you can see, the shapes of the clouds are quite distinct: the PCA cloud is a comet while the CIA cloud is more like a V. More interesting is the fact that the colors overlap to a large extent with PCA, while they are much better separated with CIA. This is because the CIA shadow best preserves the relationship between the bags of words and the mark given to the movie.
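
The color code is a simple linear map, sketched below. The post does not say how the mark enters CIA; one plausible option, assumed here, is a one-hot table with one column per grade, which can then serve as the second table of a co-inertia analysis. Both function names are hypothetical.

```python
import numpy as np

def mark_to_rgb(mark):
    """Black for 1/10, red for 10/10, linear black-to-red gradient in between."""
    t = (mark - 1) / 9          # 0.0 at 1/10, 1.0 at 10/10
    return (t, 0.0, 0.0)        # (red, green, blue), each in [0, 1]

def marks_table(marks):
    """Assumed encoding: one-hot table with one column per grade 1..10."""
    marks = np.asarray(marks)
    return np.eye(10)[marks - 1]  # row i has a single 1 in column marks[i] - 1

print(mark_to_rgb(10))               # (1.0, 0.0, 0.0)
print(marks_table([1, 10]).shape)    # (2, 10)
```

A list such as `[mark_to_rgb(m) for m in marks]` can be passed as the `c` argument of matplotlib's `scatter` to reproduce the gradient.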

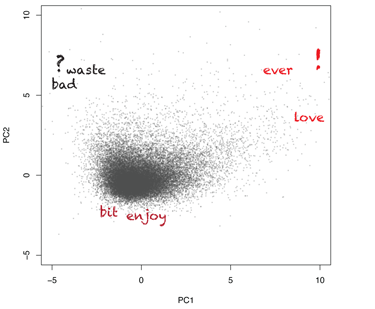

Not only does CIA (or PCA) separate the reviews, it also highlights which words influence their location in the shadow. In the second figure I show some of the most informative words along with their influence on the geometry of the cloud. I drew the reviews in pale grey to highlight the position of the words relative to the cloud. Reviews projected close to a word often contain this word, so words close together are often found in the same reviews.
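
Because the shadow of a review is a linear combination of its (centred) word counts weighted by the axes, each word pushes the review along its own direction, and reviews that contain a word are pulled towards it. The post does not define "most informative"; one simple criterion, assumed here, is the length of each word's arrow on the two plotted axes. The function name is hypothetical.

```python
import numpy as np

def most_influential_words(axes, vocabulary, n=5):
    """Rank words by the length of their arrow in the 2-D shadow.

    `axes` has one row per word and one column per shadow axis, for
    instance PCA loadings or the word-side co-inertia axes.
    """
    axes = np.asarray(axes, dtype=float)
    lengths = np.linalg.norm(axes[:, :2], axis=1)  # influence on the plotted plane
    order = np.argsort(lengths)[::-1][:n]          # longest arrows first
    return [vocabulary[i] for i in order]

print(most_influential_words([[0.1, 0.0], [0.9, 0.1], [0.0, -0.5]],
                             ["the", "!", "?"], n=2))  # ['!', '?']
```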

It is again striking that punctuation marks such as the question mark and the exclamation mark are very informative. While the question mark is clearly associated with poor grades, the exclamation mark is overused almost exclusively when the movie is rated 10/10. To give you an idea, I drew a black circle around one of the reviews closest to the exclamation mark in the right panel of the first figure (top right corner), and I reproduce its text below.

I dont like boxing...much, myself, and when I saw this movie, I can only say It Kicked Azz!!! It was totally awesome! And if I am the type of person whom does not like boxing, then any of you others who dont like boxing much either, really should see this!! The story line..... everything, just was real good! They did a GREAT JOB!!!
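
Counting exclamation marks per sentence needs a sentence splitter, and the crude rule in this sketch is an assumption: a sentence ends with a run of '.', '!' or '?' followed by whitespace and a capital letter, or by the end of the text, so the ellipses in "boxing...much" and "line..... everything" do not end sentences. Applied to the review above, it finds 10 exclamation marks in 5 sentences. Since it relies on capitalization, it would undercount sentences in all-lowercase text.

```python
import re

def exclamations_per_sentence(text):
    """Average number of '!' per sentence, using a crude sentence splitter."""
    # Assumed rule: a run of '.', '!' or '?' ends a sentence when it is followed
    # by whitespace and a capital letter, or by the end of the text.
    ends = re.findall(r"[.!?]+(?=\s+[A-Z]|\s*$)", text)
    return text.count("!") / max(len(ends), 1)

print(exclamations_per_sentence("It Kicked Azz!!! It was totally awesome!"))  # 2.0
```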

That's two exclamation marks per sentence. But the most extreme review of the genre was so far off the cloud that it did not fit on the figure. It is entitled "what i think of the movie!!!!" and here it is:

i love this movie so much!!!!!!!!!!!!!! it is one of my favorite movies!!!!!!!!!!!!!!!! all the hot guys in this film and the story how they made it like our time!!!!!!!!!!! it was unbelievable!!!!!!!!!!everything was just so great and everything!!!!!!!!!!!!!!! i want this movie so badly!!!! i've watched it 200 time when i borrowed it from a friend!!!!!!!!!!!!! they picked the right actors for this movie!! everything was good from the beginning to the end!!!!!!!!!!!!!! i give this movie a 10!!!!!!!!!!!!!!! it was so good!!!!!!!!!!!!!!!!! it caught my attention from the minute it started!!!!!!!!!!! i give this movie a good rating!!!!!!!

Well, that's what I call a true Romeo + Juliet fan!!! But let us return to our original question. We now have enough elements to measure the variation of style of a single author and compare it to the global variation of style on IMDB.

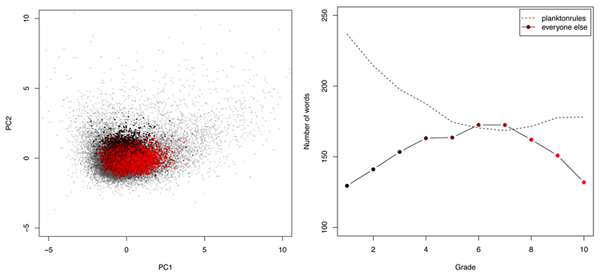

In the left panel of the third figure I have overlaid all the reviews of planktonrules (in color) on top of the reviews of everyone else (in pale grey). The color scheme is the same as before: black and red dots have the same arrangement because planktonrules uses the same words as everyone else to describe a good or a bad movie. However, the cloud of planktonrules' reviews is clearly much more compact than the global cloud, which means that the style of planktonrules does not explore the full spectrum of IMDB styles. In particular, it never goes to the "omg" style of the examples shown above. The right panel shows the number of words in a review against the mark, either for all the reviews together or for planktonrules' reviews alone. The trends clearly go in opposite directions: planktonrules generally writes longer reviews for lower marks. This suggests that the style of a single writer does vary substantially with his/her appreciation of the movie, but that the bulk of style variation on IMDB comes from intrinsic differences of style between users.
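
Both observations can be put into numbers with simple summaries, sketched below. These summaries are my own choice, not necessarily what produced the figure. The compactness of a cloud can be measured by its total inertia, the mean squared distance of its points to their centroid, so a smaller value for planktonrules' shadow coordinates than for all reviews would indicate a more compact cloud. The right panel corresponds to the average review length for each mark. Function names are hypothetical.

```python
from collections import defaultdict
import numpy as np

def spread(points):
    """Total inertia of a cloud: mean squared distance of its points to their centroid."""
    P = np.asarray(points, dtype=float)
    return float(((P - P.mean(axis=0)) ** 2).sum(axis=1).mean())

def mean_length_by_mark(marks, n_words):
    """Average review length (in words) for each mark, sorted by mark."""
    totals, counts = defaultdict(float), defaultdict(int)
    for mark, n in zip(marks, n_words):
        totals[mark] += n
        counts[mark] += 1
    return {mark: totals[mark] / counts[mark] for mark in sorted(totals)}

print(spread([[0, 0], [2, 0]]))                          # 1.0
print(mean_length_by_mark([1, 1, 10], [100, 200, 50]))  # {1: 150.0, 10: 50.0}
```

Computing `mean_length_by_mark` once on all reviews and once on a single user's reviews gives the two curves to compare.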

It took an epic IMDB reviewer, a forgotten statistical method and a shadow artist, but we finally have an answer. I may still post about a few more gems that I found down the IMDB mine but, in any event, we now have a picture of what is going on there.